The Illustrated GPT-2 (Visualizing Transformer Language Models) – Jay Alammar – Visualizing machine learning one concept at a time.

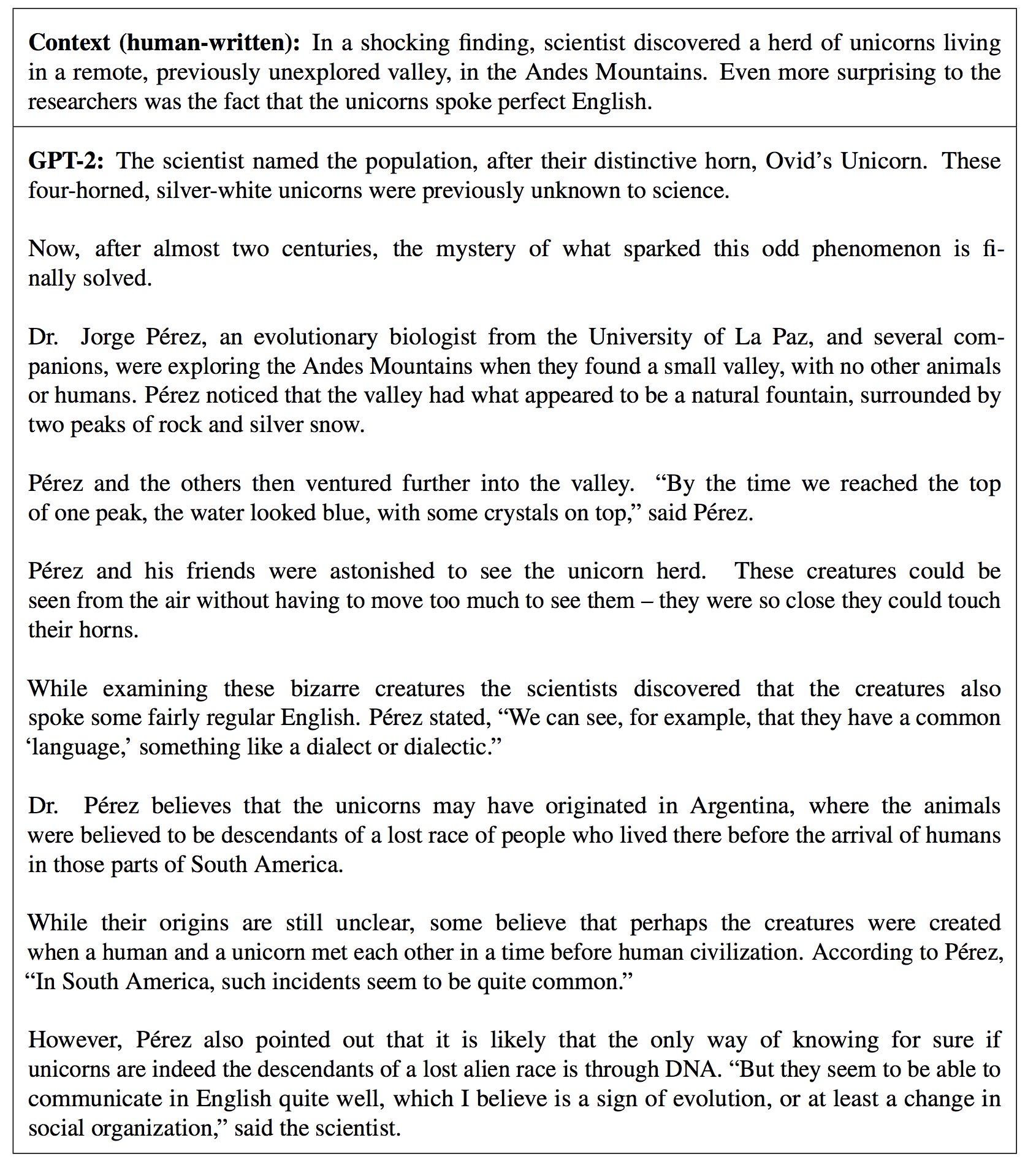

Ryan Lowe on Twitter: "Here's a ridiculous result from the @OpenAI GPT-2 paper (Table 13) that might get buried --- the model makes up an entire, coherent news article about TALKING UNICORNS,

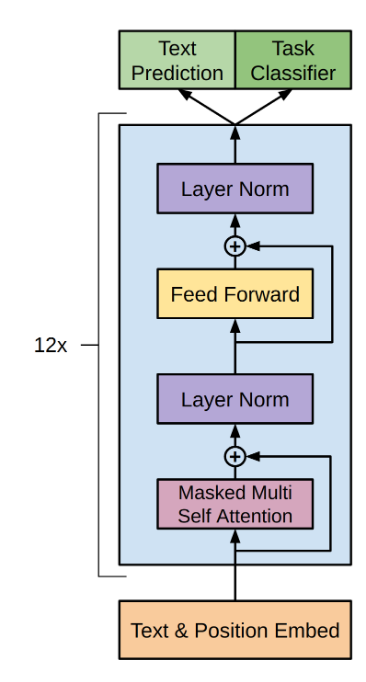

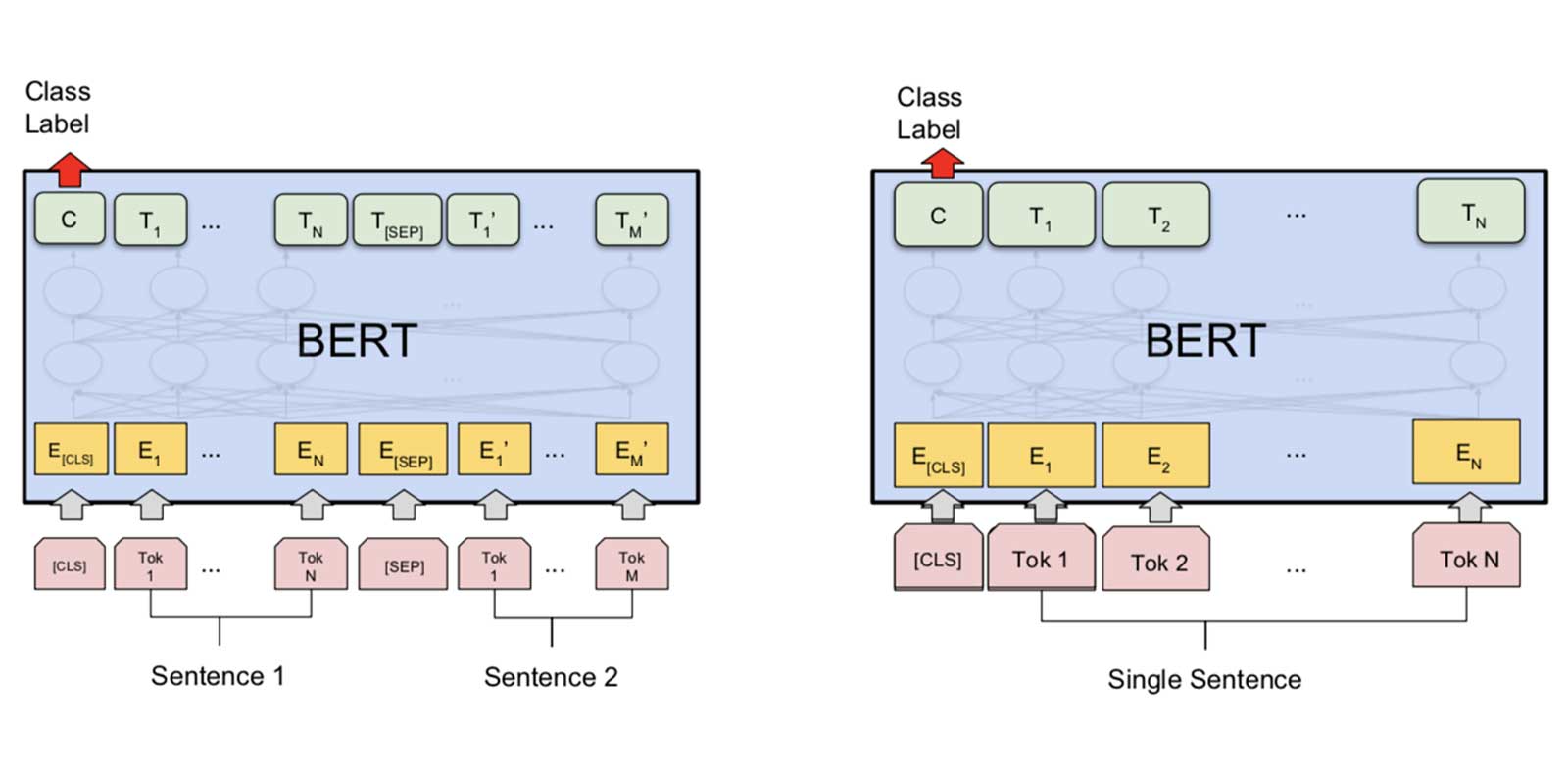

![Learning to Answer by Learning to Ask: Getting the Best of GPT-2 and BERT Worlds | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/c1ac3fbf530bf2eb207aa1a20dd14c8ed9f6766b/2-Figure1-1.png)

[PDF] Learning to Answer by Learning to Ask: Getting the Best of GPT-2 and BERT Worlds | Semantic Scholar

OpenAI's GPT-2 Explained | Visualizing Transformer Language Models | Generative Pre-Training | GPT 3 - YouTube
